- AI For Business

- Posts

- OpenAI's "Code Red" Response To Google's Gemini 3 For App Coding Is Called Garlic 🧄

OpenAI's "Code Red" Response To Google's Gemini 3 For App Coding Is Called Garlic 🧄

Some say it's code name for GPT-5.5

Good morning!

So I've been hearing some crazy stuff about OpenAI lately.

OpenAI, the company behind ChatGPT is apparently dropping a "code red" response to Gemini 3 and Claude Opus 4.5. The new model's code name? "Garlic."

Yeah, I'm not making this up. They literally called it Garlic.

It’s in the news everywhere

And it's probably going to be GPT-5.5.

If you're building apps with AI like I am, this is a big deal. Like, really big.

Let me break down what I'm hearing.

Here's What We Know So Far:

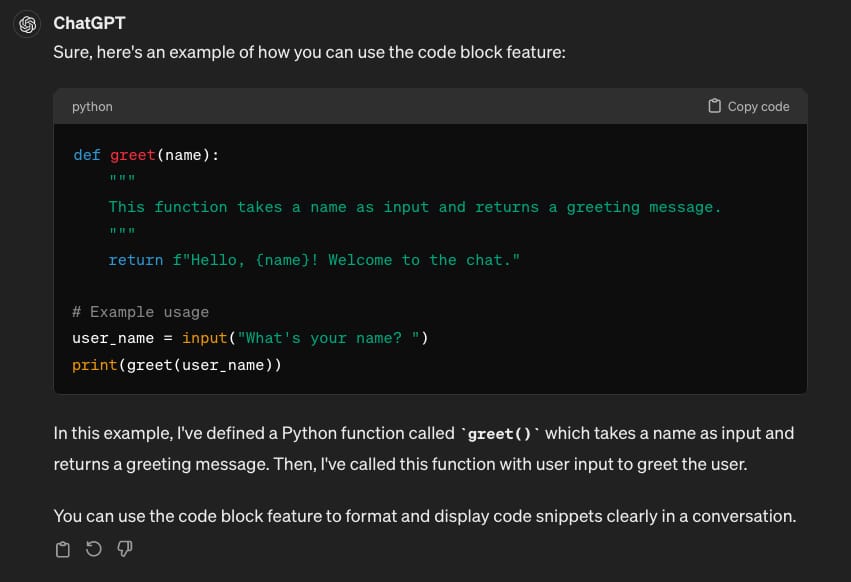

1. Coding Performance: Who Can Code Better With Less Bugs?

Okay, so we're going to compare three AI models here: Gemini 3, Claude Opus 4.5, and OpenAI's Garlic.

The big question: Who writes the cleanest code with the fewest bugs?

Let's start with the popular kid on the block.

Gemini 3 gets tons of hype for building entire apps in one shot, which sounds amazing…

But here's the catch.

Strong Points:

Super fast (generates code in under 30 seconds for simple apps) - which is great for prototyping apps

Really good at understanding plain English

Handles images, voice, and text all at once

Gemini 3's Problems:

Creates 7 bugs per app on average 3 of those are critical bugs (your app literally breaks)

Falls apart when your app has more than 20 functions

Makes logic errors 40% of the time in complex projects

Can't fix its own mistakes without you holding its hand Gemini 3's

Claude Opus 4.5's Strong Points:

Only makes 2 small bugs per app (and they're usually easy to fix)

Has a 200K token memory (remembers way more of your conversation)

Catches 85% of errors before you even see them

Can handle apps with 50+ functions like it's nothing

Claude Opus 4.5's Problems:

Hard to get access to (waitlists, API limits, expensive)

Slow during busy times (2-3 minutes to respond sometimes)

Costs 3x more than GPT-4 Not even available in a lot of countries

So Opus 4.5 is great... if you can actually use it and afford it.

OpenAI's "Garlic" is supposed to blow both of them away.

Emphasis on the word “supposed“.

What We're Hearing About Garlic:

Should make 0 to 1 bugs per app (basically perfect first try)

Even bigger memory than Opus 4.5 (rumored 300K+ tokens)

Built for enterprise apps with hundreds of functions 17% better at coding than

Gemini 3 (early benchmarks)

Way faster than Opus 4.5 (responses in under 15 seconds)

If this is true? Game over.

2. Context Window And Memory: Who Can Remember Better?

Alright, next comparison: memory and context.

We're looking at Gemini 3, Opus 4.5, and Garlic again. Who remembers what you're building better?

Context loss is super frustrating. It's when the AI just... forgets what you said 10 messages ago.

Gemini 3's Memory Problems:

Starts forgetting after 15-20 messages

If you're building something complex, it "forgets" features you asked for

You end up repeating yourself over and over Forces you to start fresh 30% of the time

Opus 4.5 is better, but not perfect:

Can go 40-50 messages before it starts losing track

Remembers 75% better than Gemini 3 But in long sessions (100+ messages), it still forgets stuff If you ask it to write 10,000+ words, accuracy drops 20%

Garlic's Expected Memory:

Built for 100+ message conversations without forgetting

Smart memory that remembers key decisions you made earlier

Can write 20,000+ words without dropping quality

Has a "checkpoint system" so you can jump back to earlier versions

Translation: You won't have to repeat yourself. It actually remembers what you're building.

That's huge.

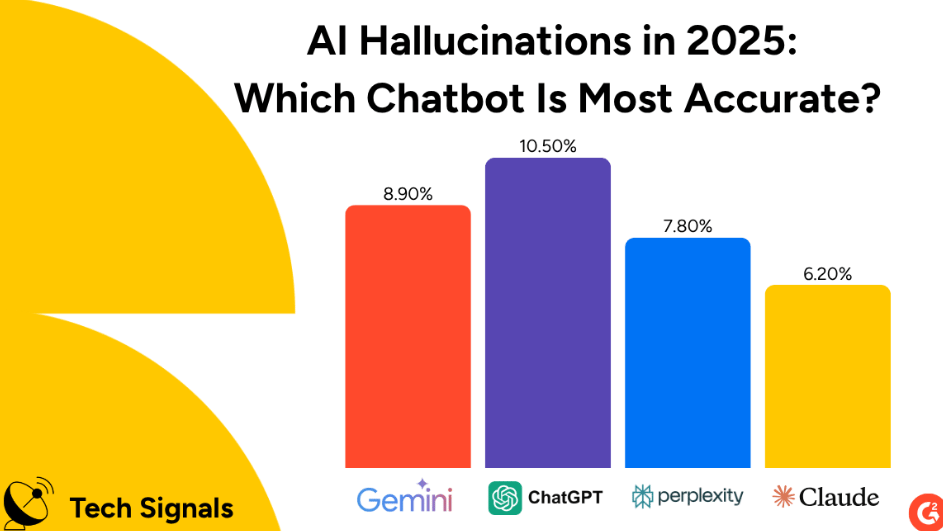

3. Hallucinations: Who Makes Things Up Less?

Last comparison: hallucinations.

This is when the AI makes stuff up and acts like it's 100% correct. It's a massive problem.

We're comparing Gemini 3, Opus 4.5, and Garlic on who lies to you the least.

Gemini 3's Hallucination Problem:

Hallucinates MORE than Gemini 2.5 (which is weird for an "upgrade")

Makes up fake code libraries 22% of the time

Invents functions that don't exist in 1 out of 5 answers

Gives wrong API info 18% of the time

Opus 4.5's Hallucination Stats:

Opus 4.5 (smart version): estimated 40-50% accuracy on hard tasks

Makes fewer mistakes than GPT-5 in smart contract tests

Users say it doesn't hallucinate on short, simple answers but extended thinking mode still makes stuff up sometimes

Garlic's Expected Hallucination Rate:

Expected under 15% hallucination rate (best in class)

Has a fact-checking system that verifies code before showing you 90%+ accuracy on technical docs

Gives you "confidence scores" so you know when it's unsure

Meaning way less AI confidently lying to you. It'll actually admit when it doesn't know something.

That alone is worth it.

But with that said, I’d still take these reports with a grain of salt since nothing is final until they actually released it, and we get to try it out ourselves.

Oh, by the way, Garlic is supposed to release publicly today or tomorrow

Expect tools like Lovable are going to adapt Garlic immediately.

So what does that mean for you?

3-5x faster app building - apps that took 2 hours to polish now takes 30 minutes

80-90% fewer bugs - from 7 bugs per app down to 0-1 50%

less back-and-forth - get it right the first time instead of going through 5+ revisions

2x more features working correctly right away

Save 10-15 hours per week you'd normally waste debugging and re-explaining stuff

If you're using Lovable, Bolt, or V0, this upgrade could mean finishing in one afternoon what used to take all day.

No joke.

Talk soon,

Brian

Reply